Summary

Technological advancements in sensors, and in their integration into a comprehensive, real-time situational picture, have revolutionised ISR capabilities. Given the vital role played by sensor fusion on the modern battlefield, a whole-of-nation approach is essential to achieve Atmanirbharta in this domain.

Introduction

The act of merging data from various sensors into a single, more accurate representation of the situation, presented as a user-friendly output on a monitor or screen, is widely known as sensor fusion. Operation Neptune Spear, the CIA-led operation that resulted in the death of Osama bin Laden, showcased the power of sensors and their integration in precision operations in a contemporary scenario. The operation involved multiple intelligence agencies combining inputs from satellites, signals intelligence (SIGINT), human intelligence (HUMINT) and other sophisticated ISR (Intelligence, Surveillance and Reconnaissance) techniques.

The integration of these surveillance systems and methods into a tightly cohesive intelligence-gathering operation, monitored directly by the White House, enabled analysts to build a single picture of the entire compound and its surrounding areas. By exploiting technology, the CIA could also monitor changes and developments in real time and analyse the synthesised inputs needed to plan and execute the successful operation that led to Osama bin Laden's neutralisation.

This multi-layered approach underlined the importance of leveraging diverse assets across multiple domains and capabilities in modern ISR operations, and demonstrated the effectiveness of integrating technology to achieve sensor fusion. These technical capabilities were complemented by traditional HUMINT techniques and human interpretation to achieve strategic objectives.
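The definition of sensor fusion given in the introduction speaks of a "more accurate" representation. One common way to make that precise, assumed here purely for illustration, is inverse-variance weighting of independent, unbiased measurements of the same quantity. The sensor labels and figures below are hypothetical; this is a minimal sketch, not a description of any system used in the operation.

```python
from dataclasses import dataclass

@dataclass
class Measurement:
    source: str      # hypothetical sensor label
    value: float     # e.g. estimated range to a target, in metres
    variance: float  # error variance of this measurement, in square metres

def fuse(measurements: list[Measurement]) -> tuple[float, float]:
    """Inverse-variance weighted fusion of independent, unbiased measurements.

    Returns (fused_value, fused_variance). The fused variance is never larger
    than the smallest input variance, which is the sense in which the fused
    estimate is 'more accurate' than any single sensor.
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    weights = [1.0 / m.variance for m in measurements]
    total = sum(weights)
    value = sum(w * m.value for w, m in zip(weights, measurements)) / total
    return value, 1.0 / total

readings = [
    Measurement("radar", 1000.0, 100.0),  # standard deviation 10 m
    Measurement("eo_ir", 1010.0, 400.0),  # standard deviation 20 m
]
value, variance = fuse(readings)
print(f"{value:.1f} m, variance {variance:.1f} m^2")  # 1002.0 m, variance 80.0 m^2
```

The fused estimate leans towards the more precise radar reading, and its variance (80 m²) is lower than either input's, which is the core benefit fusion aims for.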

Sensor Fusion Layers

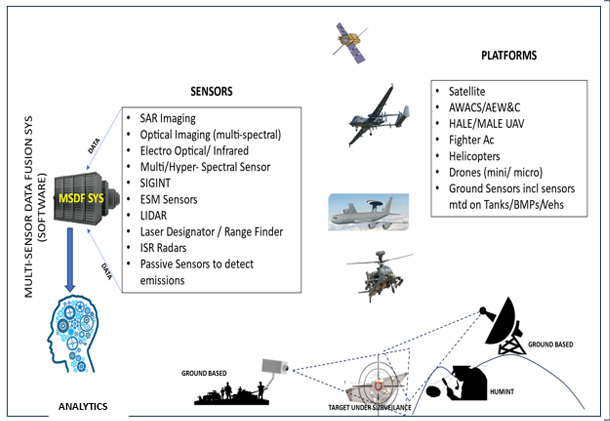

A basic example of sensor fusion is the set of cameras mounted at various angles on a modern car. Together these cameras capture a 360-degree view, and the driver's display merges their feeds into a single picture of the surroundings, making the driver situationally aware without having to look at each feed individually. This saves time and effort, is easy to comprehend and therefore aids the driver's decision-making.1 Similarly, several layers of sensor-carrying platforms are available to support ground-based operations.

Satellite Imagery

Satellites can provide high-clarity imagery in near real time that requires relatively limited processing. Cartosat-3, launched in November 2019 by the Indian Space Research Organisation (ISRO), has a ground resolution of 0.25 m and a swath of 16 km. In other words, each pixel covers roughly 25 cm on the ground across a strip 16 km wide, so the satellite can distinguish features as small as about 25 cm.2
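A quick back-of-the-envelope calculation shows what the two Cartosat-3 figures mean together. It treats ground resolution simply as the ground footprint of one pixel, which is a simplification, and the 5 m vehicle length is an illustrative assumption rather than a figure from the source.

```python
# Figures quoted in the text for Cartosat-3
ground_resolution_m = 0.25   # ground footprint of one pixel
swath_m = 16_000             # 16 km swath width

pixels_across_swath = swath_m / ground_resolution_m
print(f"{pixels_across_swath:,.0f} pixels across the swath")  # 64,000 pixels across the swath

# Illustrative assumption: a vehicle roughly 5 m long
vehicle_length_m = 5.0
pixels_along_vehicle = vehicle_length_m / ground_resolution_m
print(f"{pixels_along_vehicle:.0f} pixels along a 5 m vehicle")  # 20 pixels along a 5 m vehicle
```

Twenty pixels along a vehicle is what makes it plausible to tell a truck from a car, while a 64,000-pixel-wide strip indicates how much data each pass generates for downstream fusion.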

HALE/MALE Class of RPAS (Remotely Piloted Aircraft System)

Modern HALE/MALE (High-Altitude/Medium-Altitude Long-Endurance) RPAS carry powerful optical, infrared and radar-based sensors that can observe targets with precision and clarity. Some of these platforms carry a Multi-Spectral Targeting System, which integrates an infrared sensor, a colour/monochrome daylight TV camera and a laser illuminator to produce full-motion video fusing all of these inputs. In addition, the SAR (Synthetic Aperture Radar) on board these platforms further enhances the operators' situational awareness even in a DVE (degraded visual environment, i.e., poor visibility conditions).

Aerial Platforms (Fixed Wing and Helicopters)

Helicopters and fixed-wing aircraft (transports and fighters) commonly use a range of sensors for ISR data collection. These include electro-optical/infrared (EO/IR) cameras for visual surveillance, radar systems for detecting and tracking objects, signals equipment for intercepting and analysing electronic communications, and SAR for high-resolution imaging. Some platforms also employ specialised sensors such as hyperspectral imagers or LIDAR for precise terrain mapping, while AEW&C (Airborne Early Warning and Control) aircraft are primarily equipped for command and control of the battlespace in aerial engagements. Merging the data provided by these multiple sensors gives ground commanders comprehensive situational awareness for the conduct of ground operations.

Ground Based Tactical Sensors

Modern ground forces employ a diverse array of sensor technologies to enhance their situational awareness in the TBA (Tactical Battlefield Area). From the Long-Range Reconnaissance and Observation System (LORROS) to sound ranging equipment, Thermal Imaging Integrated Observation equipment (TIIOE), Weapon Locating Radars and Hand-Held Thermal Imaging (TI) sights, these sensors provide a comprehensive and clear view of the operational environment.

LORROS, with its high-resolution imagery and long range, enables ground commanders to monitor distant areas with precision, while sound ranging equipment detects enemy artillery fire and locates the guns by triangulation, providing crucial information for countering artillery threats. TI sights, meanwhile, let troops detect concealed targets and navigate effectively in low light or adverse weather. By integrating these sensor technologies, ground forces significantly bolster their ability to detect, identify and respond to threats swiftly and decisively, improving the prospects of operational success while reducing risk.
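Sound ranging rests on a simple principle: the same gunshot reaches microphones at known positions at slightly different times, and those time differences pin down where it came from. The sketch below illustrates that principle under stated assumptions: a flat 2-D plane, a constant speed of sound of 343 m/s, noise-free timings and a hypothetical microphone layout. It uses a brute-force grid search for clarity; it is not how any fielded sound ranging system is implemented.

```python
import math
from itertools import product

SPEED_OF_SOUND = 343.0  # m/s; assumed constant, roughly air at 20 degrees C

def arrival_times(source, mics, t0=0.0):
    """Times at which a sound fired at `source` at time t0 reaches each microphone."""
    return [t0 + math.dist(source, m) / SPEED_OF_SOUND for m in mics]

def locate(mics, times, extent=3000.0, step=20.0):
    """Brute-force grid search for the source position whose predicted time
    differences of arrival (TDOA), relative to the first microphone, best match
    the observed ones. Using differences cancels the unknown firing time."""
    observed = [t - times[0] for t in times]
    best, best_err = None, math.inf
    n = int(2 * extent / step) + 1
    for i, j in product(range(n), repeat=2):
        candidate = (-extent + i * step, -extent + j * step)
        predicted = arrival_times(candidate, mics)
        diffs = [t - predicted[0] for t in predicted]
        err = sum((o - p) ** 2 for o, p in zip(observed, diffs))
        if err < best_err:
            best, best_err = candidate, err
    return best

# Hypothetical microphone positions (metres) and gun position
mics = [(0.0, 0.0), (2000.0, 0.0), (0.0, 2000.0), (2000.0, 2000.0)]
gun = (1200.0, 2400.0)
times = arrival_times(gun, mics, t0=3.7)  # the solver never sees t0
estimate = locate(mics, times)
print(estimate)  # (1200.0, 2400.0)
```

Because only time differences are compared, the solver recovers the gun position without knowing when the round was fired; real systems must also contend with timing noise, wind and temperature gradients.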

Figure 1. Intricacy of Sensor Fusion

Source: Author’s own

Analytics

Analytics plays an important role in ISR, since sensor fusion requires processing, analysing and interpreting data from all the diverse sources and platforms described above. Through sophisticated algorithms and machine learning techniques, analytics enables not only the extraction of actionable intelligence but also the identification of anomalies and the prediction of future events from trends and patterns. The resulting situational awareness supports decision-making, enabling near real-time, effective responses to evolving threats and challenges. Data is the new gold, and putting it to good use requires skilled analytics professionals and capable software tools; together, these will drive the next revolution in sensor fusion for ISR.
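To illustrate the "identification of anomalies" mentioned above in its simplest form, the sketch below flags readings that deviate sharply from the recent history of a single sensor stream using a rolling z-score. This is a basic statistical rule swapped in for illustration, not a machine learning model; the window, threshold and vehicle-count data are hypothetical.

```python
from statistics import mean, stdev

def anomalies(series, window=5, threshold=3.0):
    """Return indices whose value deviates from the mean of the preceding
    `window` readings by more than `threshold` standard deviations."""
    flagged = []
    for i in range(window, len(series)):
        history = series[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # Flat history: any change at all counts as anomalous
            if series[i] != mu:
                flagged.append(i)
            continue
        if abs(series[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged

# Hypothetical hourly vehicle counts reported by a ground sensor
counts = [4, 5, 3, 4, 5, 4, 3, 5, 21, 4, 5]
print(anomalies(counts))  # [8]
```

Only the sudden jump to 21 vehicles is flagged; an analyst would then examine that hour against inputs from other sensors before drawing conclusions.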

Data Links: Integrator & Networking System

A datalink is a tool to enhance the situational awareness of any operator on the battlefield. It is a multi-dimensional tool that allows essential information to be exchanged between sensors, shooters, battlefield commanders and force multipliers, with the overall aim of shortening the OODA (Observe, Orient, Decide, Act) loop and enhancing battlefield transparency. The datalink can be considered the foundation of Network Centric Warfare (NCW). The significance of an operational datalink for integrating sensors deployed across multiple platforms, such as satellites, manned aircraft, UAVs, helicopters and ground-based ISR systems, cannot be overstated in contemporary military operations, in both the conventional and grey-zone domains.

A robust, secure datalink not only serves as the backbone for real-time communication and data exchange, but also acts as the bridge between disparate sensor systems. This enables seamless coordination and real-time collaboration among assets distributed across the TBA. By carrying sensor data from satellites in orbit to low-flying helicopters operating close to the target area, an operational datalink ensures that commanders have access to a comprehensive, real-time operational picture. This interconnectedness enhances situational awareness, allowing decision-makers to monitor developments in real time, track enemy movements and promptly identify emerging threats for engagement.

An effective operational datalink should integrate sensor data from multiple platforms into a unified, coherent picture on a monitor or display system, transcending the limitations of individual platforms and thereby maximising the collective capability of the sensor network. For instance, by fusing satellite imagery with data from helicopters' on-board sensors, such as electro-optical equipment, decision-makers gain a multi-dimensional perspective of the battlefield or a specific target area.

The resultant picture combines the high-altitude reconnaissance capability of satellites with the agility and responsiveness of helicopter-based surveillance. This synergy enhances reconnaissance, surveillance and target acquisition missions, providing actionable intelligence to ground forces and enhancing their operational effectiveness. Furthermore, by enabling timely and secure data sharing among platforms, an operational datalink improves connectivity, which not only streamlines decision-making but also enhances the effectiveness of joint operations, enabling ground forces to respond swiftly to evolving threats and engage targets with precision, ultimately increasing overall combat capability in the TBA.
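The idea of merging reports from a satellite and a helicopter into one coherent picture can be sketched as a small track-merging routine. It assumes, as a deliberate simplification, that every platform already reports positions on a common metric grid; in practice coordinate conversion and time alignment come first. It uses greedy nearest-neighbour gating, one of the simplest association schemes, and the 50 m gate, source labels and coordinates are all hypothetical.

```python
import math
from dataclasses import dataclass, field

@dataclass
class Track:
    x: float                  # easting, metres (common grid assumed)
    y: float                  # northing, metres
    reports: int = 1          # number of reports folded into this track
    sources: set[str] = field(default_factory=set)

def merge_reports(reports, gate_m=50.0):
    """Fold (source, x, y) reports into a single list of tracks.

    A report within `gate_m` metres of the nearest existing track is taken to
    be the same object and averaged into it; otherwise it starts a new track.
    """
    picture: list[Track] = []
    for source, x, y in reports:
        nearest = min(picture, key=lambda t: math.hypot(t.x - x, t.y - y), default=None)
        if nearest is not None and math.hypot(nearest.x - x, nearest.y - y) <= gate_m:
            n = nearest.reports
            nearest.x = (nearest.x * n + x) / (n + 1)  # running mean of positions
            nearest.y = (nearest.y * n + y) / (n + 1)
            nearest.reports = n + 1
            nearest.sources.add(source)
        else:
            picture.append(Track(x, y, sources={source}))
    return picture

reports = [
    ("satellite", 1000.0, 2000.0),
    ("satellite", 5000.0, 5200.0),
    ("helicopter_eo", 1020.0, 1990.0),  # same object as the first satellite report
]
picture = merge_reports(reports)
for track in picture:
    print(track.x, track.y, sorted(track.sources))
# 1010.0 1995.0 ['helicopter_eo', 'satellite']
# 5000.0 5200.0 ['satellite']
```

The commander sees two objects rather than three raw reports, and the first object is marked as confirmed by two independent platforms.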

Challenges

As the operational environment becomes more dynamic and larger in scale (e.g., operations across the TBA and multi-domain operations), the demands on ISR will increase manifold, and a response as precise as that of Operation Neptune Spear may not be feasible even for the world's strongest military. As the layers of sensors multiply and each sensor produces its output in a different data set or file format, complications emerge. Merging these numerous data sets into a single situational picture is the challenge that a modern army has to face. Integrating different sensors into a single display or operational picture nonetheless offers significant advantages in enhancing commanders' situational awareness during military operations.

However, this integration process is not without its share of complications and compatibility issues. The biggest challenge lies in harmonising data from disparate sources, each with its own algorithms, formats, resolutions and update rates. Ensuring compatibility and uniformity across these inputs requires sophisticated data processing software and fusion techniques, which can be resource-intensive and prone to software errors and misinterpretation.
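One concrete part of "harmonising update rates" is resampling every sensor onto a common time base before fusion. The sketch below does this with linear interpolation, an assumption chosen for simplicity; the 1 Hz radar range and 2 Hz EO bearing streams are hypothetical, and the bearings are chosen so they never cross 0/360 degrees, since wrapped angles would need special handling.

```python
from bisect import bisect_left

def interpolate(samples, t):
    """Linearly interpolate a sensor's (time, value) samples at time t.

    Samples must be sorted by time; t outside the sampled span is clamped to
    the nearest endpoint rather than extrapolated."""
    times = [s[0] for s in samples]
    if t <= times[0]:
        return samples[0][1]
    if t >= times[-1]:
        return samples[-1][1]
    i = bisect_left(times, t)
    (t0, v0), (t1, v1) = samples[i - 1], samples[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)

# Hypothetical streams: radar range (m) at 1 Hz, EO bearing (degrees) at 2 Hz
radar_range = [(0.0, 1000.0), (1.0, 990.0), (2.0, 980.0)]
eo_bearing = [(0.0, 45.0), (0.5, 45.5), (1.0, 46.0), (1.5, 46.5), (2.0, 47.0)]

common_times = [0.0, 0.5, 1.0, 1.5, 2.0]
fused = [(t, interpolate(radar_range, t), interpolate(eo_bearing, t)) for t in common_times]
for row in fused:
    print(row)
```

After resampling, every row holds a range and a bearing for the same instant, so the two sensors can be combined into a single position estimate.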

Moreover, the sheer volume of data generated by multiple sensors on multiple platforms can overwhelm decision-makers, leading to information overload and potentially impairing decisions. Variations in sensor accuracy and coverage may also introduce uncertainties or even blind spots in the operational picture, undermining decision-makers' confidence in it. It is also pertinent to note that sensors relying on GPS inherit its errors, including clock errors and atmospheric (ionospheric and tropospheric) delays, which are common to all GPS-based systems.
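The reason clock errors matter so much for GPS-dependent sensors is that GPS measures range as signal travel time multiplied by the speed of light, so even a tiny timing bias becomes a large position error. The short worked calculation below shows the scale; the bias values are illustrative. This sensitivity is also why GPS receivers estimate their own clock bias as an extra unknown alongside position.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second

def clock_range_error(clock_bias_s: float) -> float:
    """Range error in metres produced by a timing bias of clock_bias_s seconds."""
    return SPEED_OF_LIGHT * clock_bias_s

for bias in (1e-9, 1e-6):  # 1 nanosecond and 1 microsecond (illustrative)
    print(f"{bias * 1e9:>6.0f} ns -> {clock_range_error(bias):8.2f} m")
```

A single nanosecond of bias corresponds to about 0.3 m of range error, and a microsecond to roughly 300 m, which is enough to misplace a target entirely in a fused picture.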

Cybersecurity concerns also arise, as integrating diverse sensors aboard various platforms enlarges the attack surface available to an adversary. This necessitates robust cybersecurity measures to safeguard sensitive data and systems. Addressing these complications requires not only technological advances (primarily in IT) but also trained manpower, procedural guidelines and SOPs, along with organisational structures that support and optimise the use of integrated sensor capabilities while mitigating the associated risks.

Going Forward

The military sensor fusion market is projected to grow to US$ 921 million by 2030.3 Major factors driving this growth include the increasing demand for battlefield information owing to diverse and unconventional threats. Advancements in sensor fusion technologies have led to new applications beyond traditional ground target recognition and the generation of actionable intelligence at the target end. Sensor fusion is now being applied to areas such as military flight planning, data logistics and air tasking orders. The most productive areas in military sensor fusion are the deployment of fused sensor technology in AI-enabled weapon systems, degraded-vision/night-vision weapon systems, NBC detection systems and strategic surveillance systems.4

In the Indian context, the Atmanirbharta focus in defence acquisition should accordingly turn towards this domain, and reliance on foreign technology needs to be shelved. This would require a huge, coordinated effort, as the sensors are designed and developed by various OEMs (original equipment manufacturers) in India and abroad. Developing indigenous software that merges the output of multiple sensors from multiple OEMs would require a focussed whole-of-nation effort. This would involve the acquisition organisation securing rightful access to equipment designs and, where possible, transfer of technology for sensors procured from foreign OEMs, as well as a secure indigenous ODL (operational datalink) able to network multiple platforms and ensure secure communication and data exchange.

In the realm of ISR, technological advancements have ushered in an era of unprecedented data-collection capability. However, amid this technological prowess, it is critical not to overlook the enduring significance of HUMINT. Its indispensability was underscored on 7 October 2023, when Hamas infiltrated Israeli territory despite the large number of sensors deployed by the Israel Defense Forces (IDF) along the Gaza border fence.

HUMINT, or spycraft, often described as the oldest form of intelligence gathering, involves obtaining information through human sources, mostly intelligence operatives. Despite the proliferation of sophisticated surveillance technologies, HUMINT remains unparalleled in its ability to provide context, nuance and analysis of the human factor (the human mind), all crucial for understanding complex situations that demand cognitive judgement which is difficult to achieve through technology and AI.

The primary reason HUMINT stands out amid a plethora of sensors is its adaptability. While technology can be cutting-edge and versatile, it often lacks the cognitive flexibility and intuition inherent in the human mind. HUMINT operatives can assess subtle cues, build rapport within the adversary's camp or population and navigate intricate social dynamics and mindsets, allowing them to extract valuable insights that may evade even the most sophisticated contemporary surveillance or intelligence collection systems.

Furthermore, HUMINT excels where technological solutions falter or cannot penetrate, such as human intentions, levels of motivation and other intangibles like popular sentiment. Understanding the 'why' behind actions is often as crucial as knowing the 'what' or 'how'. Human sources can provide invaluable insights into the minds of adversaries, enabling decision-makers to anticipate their moves, counter threats effectively and formulate strategic responses.

Therefore, while we focus on sensor fusion for effective, comprehensive ISR, encompassing sensors at all levels and on multiple platforms, we should also remain focussed on the most important sensor and processor available to us, i.e., the human sensory organs and the human mind. Integrating HUMINT into the sensor network is the least complicated step, as it requires neither complex algorithm integration nor complicated architecture. It only requires constant focus and monitoring by both the operative on task and the handler, which in turn requires adequate training of operatives to identify critical situations and transmit their inputs in real time. In essence, HUMINT needs to be superimposed over all other sensor inputs before decisions are taken.

Conclusion

While technological advancements in sensors and their integration into a comprehensive, real-time situational picture have revolutionised ISR capabilities, the indispensability of human intelligence cannot be overstated. In an operational environment where adversaries constantly evolve methods to counter detection, HUMINT remains the gold standard of intelligence operations, offering deep analysis, intuitive adaptability and insight. As we harness technological might and enmesh various sensors to achieve a transparent battlefield, we must not forget the enduring value of the human factor in the pursuit of national security and strategic advantage.

Views expressed are of the author and do not necessarily reflect the views of the Manohar Parrikar IDSA or of the Government of India.

- 1. Bosch Mobility Manual on Multi-Camera System.

- 2. Sandhya Ramesh, “Cartosat-3 Images are so clear that you can tell a truck from a car, read road markings”, The Print, 31 January 2020.

- 3. Lisa Daigle, "Military Embedded Systems Market Report 2020–2030", Report, Visiongain, 22 June 2020.

- 4. Rajesh Uppal, "Military Sensor Fusion Technology Enhances Situational Awareness of Air, Sea and Space-based Platforms and Remote Intelligence", International Defence, Security & Technology, 29 June 2021.